Generative AI is currently the hottest topic in the technology industry, with AI centered around large language models sweeping across nearly all sectors. What kind of transformation will the chip industry undergo next? Driven by the AI craze, major tech companies are all vying to capture this new market. Providing the computational power to meet the new demands of Gen AI is the most crucial link, and this is precisely what NVIDIA is doing.

Within 15 months, NVIDIA's market value soared sixfold to exceed US$2.2 trillion, making it the world's third-largest technology company after Apple and Microsoft, and it continues to grow. To solidify its position as the preferred supplier for artificial intelligence companies, NVIDIA unveiled the new Blackwell GPU architecture for running artificial intelligence models at GTC 2024. It will also expand further into downstream industries through NIM and Omniverse.

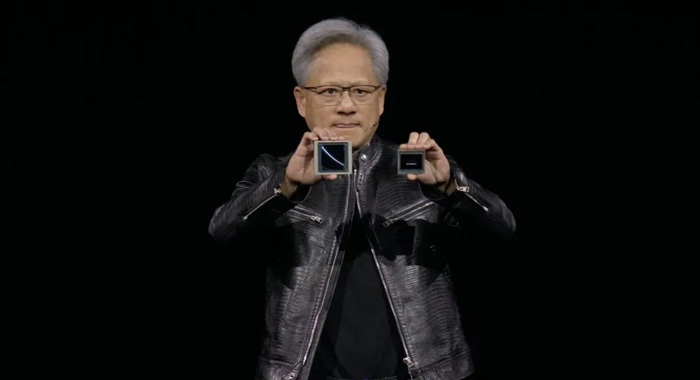

In his two-hour opening keynote, NVIDIA founder Jensen Huang showed the world NVIDIA's new engine: hardware, software, and services built on a new computing architecture.

• The new Blackwell GPU architecture and the GB200 'Superchip' will provide 4 times the training performance of Hopper and support large models with parameters at the trillion level.

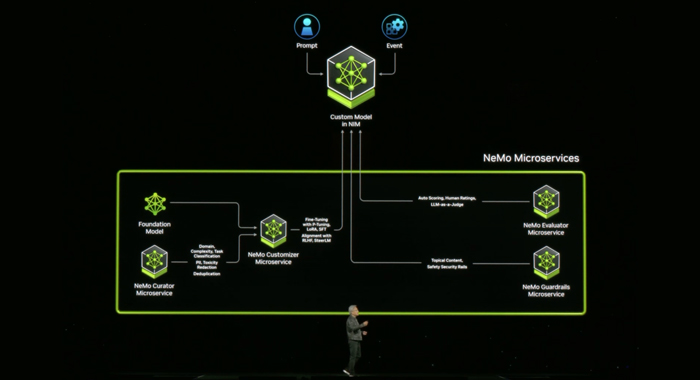

• The innovative software NIM makes it easier for companies to customize large models on the NVIDIA platform.

• Omniverse Cloud integrates the training and application of robotics, autonomous driving, and digital twins in one platform.

• NeMo, software for custom model development, and the AI factory.

Blackwell B200 GPU And GB200

Blackwell is named after mathematician David Harold Blackwell. It is a new generation of GPU architecture following the Hopper architecture. GB200 is the first Blackwell-based chip and will be available by the end of this year.

Blackwell B200 GPU vs. Hopper (Source: NVIDIA)

Blackwell GPUs will be available as standalone GPUs, or two Blackwell GPUs can be combined and paired with Nvidia's Grace CPU to create a Superchip, the GB200.

The GB200 connects two B200 GPUs to the Grace CPU through a 900GB/s ultra-low-power chip-to-chip interconnect. Nvidia says the system can deploy models with 27 trillion parameters. By comparison, GPT-4 reportedly has 1.7 trillion parameters.
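To put the two parameter counts above in perspective, here is a rough back-of-the-envelope sketch in Python. The parameter counts come from the article; the bytes-per-parameter values are assumptions about common numeric formats, not figures from Nvidia, and the estimate covers model weights only (no activations, caches, or optimizer state).

```python
# Parameter counts quoted in the article.
GB200_MAX_PARAMS = 27e12   # 27 trillion, per Nvidia
GPT4_PARAMS = 1.7e12       # 1.7 trillion, as reported

# ASSUMPTION: storage size per parameter for common numeric formats.
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}


def weight_terabytes(params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in terabytes (1 TB = 1e12 bytes)."""
    return params * bytes_per_param / 1e12


# How much larger is the quoted 27T ceiling than the reported GPT-4 size?
scale_ratio = GB200_MAX_PARAMS / GPT4_PARAMS
print(f"27T vs 1.7T parameters: about {scale_ratio:.1f}x")

# Weight storage for a 27T-parameter model under each assumed format.
for fmt, size in BYTES_PER_PARAM.items():
    tb = weight_terabytes(GB200_MAX_PARAMS, size)
    print(f"{fmt}: about {tb:.1f} TB of weights")
```

Even under the most compact assumed format, the weights alone would run to many terabytes, which is why a model of this size has to be spread across many GPUs rather than a single chip.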

To achieve ultra-high AI performance, systems equipped with GB200 can connect to NVIDIA Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms. Both platforms provide high-performance networking at speeds up to 800Gb/s.

Nvidia says the GB200 delivers up to a 30x performance improvement for inference workloads compared with the current H100 GPU.

Nvidia also released the GB200 NVL72 liquid-cooled rack system, which is composed of 36 Grace CPUs and 72 Blackwell GPUs. On the GB200 NVL72, inference computing power can reach 1,440 PFLOPS, and the maximum data throughput can reach 260TB per second. FP8-precision training computing power can reach 720 PFLOPS, which is almost equivalent to a supercomputer cluster.
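The rack-level figures above can be broken down per GPU with some simple arithmetic. The following Python sketch uses only the numbers quoted in the article and assumes the rack totals divide evenly across the 72 GPUs; the per-GPU results are derived for illustration and are not official per-chip specifications.

```python
# Figures quoted in the article for the GB200 NVL72 rack.
GRACE_CPUS = 36
BLACKWELL_GPUS = 72
INFERENCE_PFLOPS = 1440     # rack-level inference compute
FP8_TRAINING_PFLOPS = 720   # rack-level FP8 training compute

# One GB200 superchip pairs two Blackwell GPUs with one Grace CPU.
GPUS_PER_SUPERCHIP = 2


def per_gpu(rack_pflops: float, gpus: int = BLACKWELL_GPUS) -> float:
    """ASSUMPTION: naive even split of a rack-level figure across GPUs."""
    return rack_pflops / gpus


superchips = BLACKWELL_GPUS // GPUS_PER_SUPERCHIP
inference_per_gpu = per_gpu(INFERENCE_PFLOPS)
fp8_per_gpu = per_gpu(FP8_TRAINING_PFLOPS)

print(f"GB200 superchips per rack: {superchips}")
print(f"Inference per GPU: {inference_per_gpu:.0f} PFLOPS")
print(f"FP8 training per GPU: {fp8_per_gpu:.0f} PFLOPS")
print(f"Inference / FP8 ratio: {inference_per_gpu / fp8_per_gpu:.1f}x")
```

The superchip count matches the 36 Grace CPUs in the rack, which is consistent with one CPU per GB200. The inference figure being exactly twice the FP8 figure suggests it is quoted at a lower precision than FP8, although the article does not say which.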

DGX GB200 NVL72

The Blackwell GPU and GB200 superchip will be introduced in the NVIDIA DGX B200 system for model training, fine-tuning, and inference. All NVIDIA DGX platforms include NVIDIA AI Enterprise software for enterprise-grade development and deployment.

Jensen Huang said that Nvidia plans to bring Blackwell to artificial intelligence companies around the world and to sign contracts with all OEMs, regional clouds, national sovereign AI initiatives, and telecommunications companies.

Amazon, Dell, Google, Meta, Microsoft, OpenAI, and Tesla all plan to use Blackwell GPUs.

NIM (Nvidia Inference Microservice)

If an enterprise wants to use a large model, it usually needs to fine-tune the model and deploy it privately to serve its business. At this conference, Nvidia launched its model customization service, NIM (Nvidia Inference Microservice).

First, this "digital box" offers a variety of models to choose from, including open-source models such as Llama from NVIDIA's partners. According to NVIDIA, the models have been optimized for NVIDIA hardware. Whether the hardware is a laptop with only one CPU or a company-scale system with multiple GPU nodes, the models can be used directly.

NIM

Additionally, NIM helps users fine-tune large models. For example, NVIDIA NeMo Retriever, announced in November 2023, is integrated into the microservices to speed up information retrieval and help enterprises enable retrieval-augmented generation (RAG). NIM also includes digital human microservices to help users create digital humans, among others.

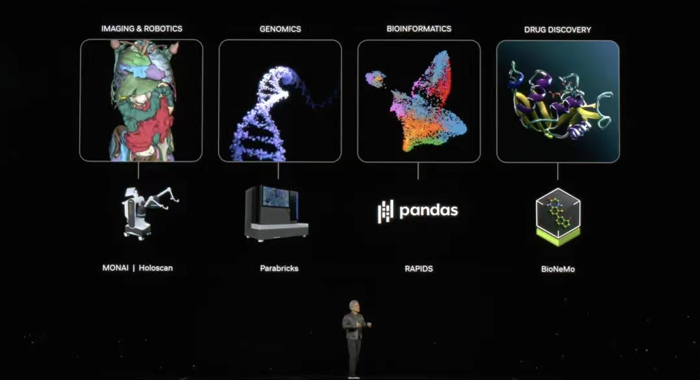

In medical applications, NVIDIA has launched 25 new microservices for healthcare scenarios to help healthcare companies worldwide improve efficiency with generative AI. These microservices include a range of drug discovery models, including MolMIM for generative chemistry, ESMFold for protein structure prediction, and DiffDock to help researchers understand how drug molecules interact with targets.

NVIDIA Healthcare

Healthcare industry companies can use these microservices to screen trillions of drug compounds, collect more patient data to aid early disease detection, or implement smarter digital assistants, among other things.

NVIDIA gave an example in which its microservices speed up variant calling in a genome analysis workflow by more than 50 times compared with running on a CPU. Some start-ups have built AI-driven clinical conversation platforms that call patients to schedule appointments, including pre-operative ones, and to follow up after discharge. This could greatly alleviate widespread staff shortages and improve patient outcomes. It also saves clinicians up to three hours.

Currently, nearly 50 application providers around the world are using NVIDIA’s medical microservices.

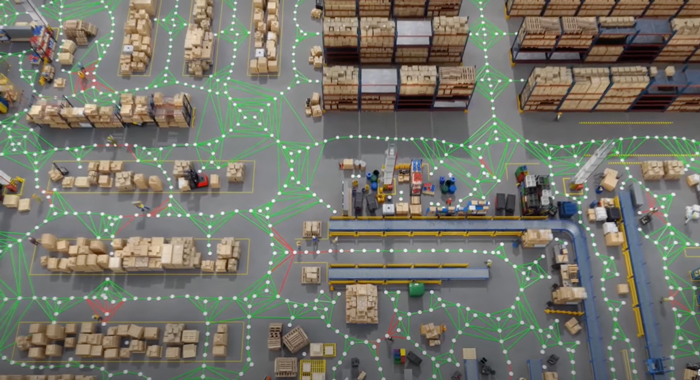

Omniverse - Digital Warehouse And Robots

Omniverse is a unified platform that brings together NVIDIA's core strengths: computer graphics, artificial intelligence, scientific computing, and physical simulation. It can produce a 1:1 digital twin of an environment. Nvidia built Isaac Lab, a robot learning application for training on the Omniverse-based Isaac simulator. With new compute orchestration services, robots can be trained in physics-based simulations and then transferred zero-shot to real-world environments.

Jensen Huang announced Project GR00T, a general-purpose foundation model for humanoid robots, intended for developing production robots based on Jetson Thor.

Project GR00T

NVIDIA also showcased a digital warehouse example: an Omniverse simulation environment for a 100,000-square-foot warehouse that integrates live video, digital worker AMRs running the Isaac perception stack, and a centralized activity map of the entire warehouse built from 100 simulated ceiling-mounted cameras.

Digital Warehouse with Omniverse

In the video, when the planned route of a digital worker AMR is obstructed, NVIDIA Metropolis can change the path planning in real time. Through the generative AI-based Metropolis vision foundation model, operators can even use natural language to ask questions to understand the situation.

NVIDIA announced an API for Omniverse Cloud that is designed to be easy to use and AI-capable. For example, it can create 3D images of simulation environments directly from natural-language scene descriptions.

Furthermore, NVIDIA announced support for Apple Vision Pro, enabling Omniverse Cloud to stream to the headset.

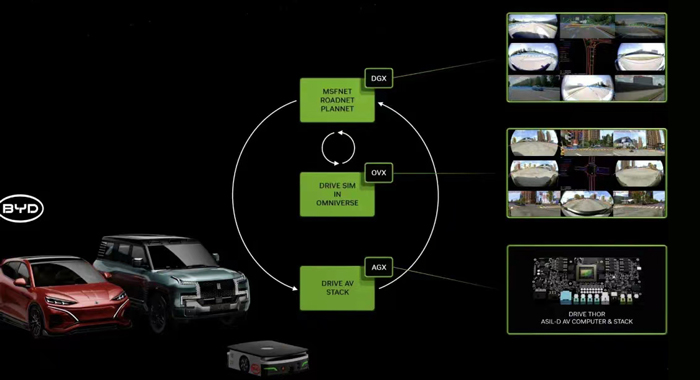

DRIVE Thor Onboard Computing Platform

Compared with the previous-generation DRIVE Orin, DRIVE Thor will offer more feature-rich cockpit capabilities and stronger autonomous driving performance. It integrates the new NVIDIA Blackwell architecture, which is designed specifically for Transformer, LLM, and generative AI workloads.

Omniverse Cloud + Vision Pro

BYD's collaboration with NVIDIA extends from automobiles to the cloud. In addition to building the next generation of electric vehicle fleets on DRIVE Thor, BYD also plans to use NVIDIA's AI infrastructure for cloud-based AI development and training technologies. Manufacturers like GAC and XPeng have also indicated that they will use the DRIVE Thor onboard computing platform.

Communications & Climate Applications - Digital Twin Cloud Platform

In the communications industry, NVIDIA has launched a 6G research cloud platform. Based on this platform, communications companies can precisely simulate physical terrain and man-made structures, enhancing the reliability of wireless transmission. Its partners include Nokia and Samsung.

Additionally, NVIDIA has introduced the Earth-2 climate digital twin cloud platform for simulating and visualizing weather. Unlike traditional CPU-driven modeling, Earth-2 can provide users with warnings and updated forecasts in just a few seconds. Furthermore, the climate images it generates have a resolution 12.5 times higher than current numerical models and are produced 1,000 times faster and 3,000 times more energy-efficiently, correcting the inaccuracies of coarse-resolution predictions.

6G Research Cloud Platform

Jensen Huang also introduced practical use cases. For example, the Central Weather Bureau of Taiwan plans to use this model to predict typhoon landfall locations more precisely, so that people can be evacuated earlier and casualties reduced.

NVIDIA also announced that Japan's new ABCI-Q supercomputer will be powered by NVIDIA's accelerated and quantum computing platforms. The supercomputer, with more than 2,000 NVIDIA H100 GPUs across over 500 nodes, is interconnected by NVIDIA Quantum-2 InfiniBand, which NVIDIA describes as the world's only fully offloadable in-network computing platform, and it is expected to be deployed early next year.

In an era where generative AI represents the future, everyone wants to keep up with the trend. GTC 2024 featured over 900 sessions and a hundred exhibitors, and more than 10,000 people watched the keynote live. Apart from Apple, NVIDIA is one of the few companies that can turn technology into something everyone wants to get close to. By providing the computing power for future AI, NVIDIA has also become the trend's biggest beneficiary, and it is expected to become the company with the highest market value in the world.